When we ask whether a policy works, we usually mean whether it works in a specific context and setting. Unfortunately, health policy has an attribution problem. Many factors shape how health systems affect health outcomes, and these factors interact in confusing and often contradictory ways. Worse yet, relatively few studies examine how policies lead to outcomes within a specific country. As a result, policymakers, advocates, and researchers struggle to assess which reforms are most effective for achieving Universal Health Coverage (UHC).

McIntyre et al. (2018) found that one of the most common reforms, premium-based health insurance, is “unlikely to be the most efficient or equitable means of financing health services in sub-Saharan African countries.” Giedion et al. (2013) and Paul et al. (2018) found little evidence of impact for various UHC-related policy reforms, such as insurance coverage expansion, user-fee exemptions, private sector contracting, and performance-based financing. Reasons ranged from difficulty isolating a reform’s effects from other causes to differing expert opinions and mixed study results. Moreover, reforms often skew the health system further toward the wealthy, urban, employed, and educated by violating some of the five unacceptable tradeoffs identified by Norheim (2015).

Often these are problems of contextualization: evidence of impact in one country does not ensure that a reform will work elsewhere. For example, performance-based financing (PBF) reforms in Rwanda produced significant improvements in treatment quality and utilization, especially for maternal health interventions (Iyer et al. 2017; Rusa et al. 2018; Skiles et al. 2015). Yet a World Bank evaluation of a similar intervention in Cameroon found little impact on maternal and child health indicators, likely because Rwanda had a low-user-fee environment while Cameroon had a high-user-fee one.

So, what can be done to address these evaluation challenges? Here at RTI, researchers from across the institute came together to conduct literature reviews identifying data sources, evaluation options, and methodological issues for evaluating health policy in low- and middle-income countries. For a description of our methods and more in-depth findings, please see the complete paper.

Data sources

We identified three major data sources and highlighted the challenges and opportunities of each: DHIS2, the Demographic and Health Surveys (DHS), and claims data. DHIS2 data are widely available and raise few privacy concerns because they are aggregated to the facility level. However, they suffer from data quality gaps, and that same aggregation limits them to facility-level analysis. DHS and other routine household survey data are of high quality, easily available, and raise few privacy concerns. However, the time lag between survey rounds and the limited range of questions on quality and financing reduce their usefulness for informing timely policy decisions. Claims data hold great promise for evaluating utilization, quality of care, and expenditures in countries with national health insurance schemes. In low- and middle-income countries (LMICs), though, these schemes are often nascent or nonexistent. Even in countries with national health insurance, such as Ghana, Indonesia, and the Philippines, data completeness, privacy, and storage remain concerns.

Evaluation options

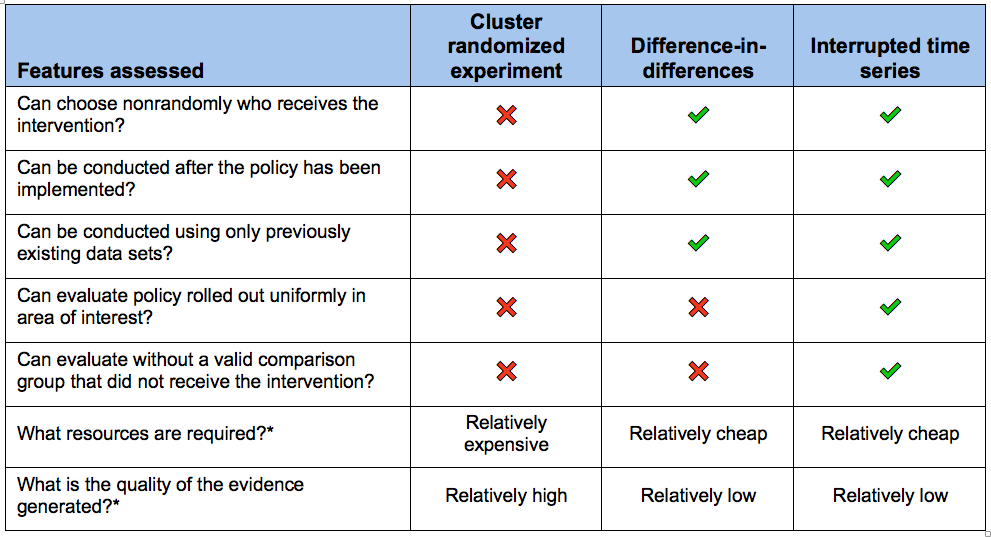

Policy reform choices, outcomes of interest, and data and resource availability drive the selection of evaluation methods. We chose three methods common in the health policy evaluation literature for further discussion: cluster randomized trials, difference-in-differences evaluations, and interrupted time series evaluations. In cluster randomized trials, researchers randomly assign groups, or “clusters,” of people to either receive or not receive an intervention. In difference-in-differences studies, researchers compare the change in an outcome in the intervention group from before to after the intervention with the change in the same outcome over the same period in a group that did not receive the intervention. In interrupted time series studies, researchers compare the trend in an outcome of interest before the intervention with its trend after the intervention within the same group.
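To make the three designs more concrete, here is a minimal Python sketch (standard library only) of the core calculation behind each one. It is a simplified illustration, not the models used in our paper: the function names (`assign_clusters`, `difference_in_differences`, `interrupted_time_series`), the default `seed`, and the numbers in the usage note are hypothetical. A real evaluation would also need regression adjustment, clustered or autocorrelation-robust standard errors, and checks of key assumptions, such as parallel pre-intervention trends for difference-in-differences.

```python
import random
from statistics import fmean


def assign_clusters(cluster_ids, seed=2024):
    """Cluster randomized trial: randomly assign whole clusters
    (e.g. districts or facilities) to intervention or control."""
    ids = sorted(cluster_ids)  # fixed order so the seed alone determines the draw
    rng = random.Random(seed)
    rng.shuffle(ids)
    treated = set(ids[: len(ids) // 2])  # first half after shuffling gets the intervention
    return {c: c in treated for c in cluster_ids}


def difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """2x2 difference-in-differences on group means: the change in the
    intervention group minus the change in the comparison group."""
    treat_change = fmean(treat_post) - fmean(treat_pre)
    ctrl_change = fmean(ctrl_post) - fmean(ctrl_pre)
    return treat_change - ctrl_change


def _ols_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def interrupted_time_series(times, outcomes, t0):
    """Segmented regression for one group: fit separate linear trends
    before and after the intervention at time t0, then return the
    immediate level change at t0 and the change in slope."""
    pre = [(t, y) for t, y in zip(times, outcomes) if t < t0]
    post = [(t, y) for t, y in zip(times, outcomes) if t >= t0]
    if len(pre) < 2 or len(post) < 2:
        raise ValueError("need at least two observations on each side of t0")
    a_pre, b_pre = _ols_line(*zip(*pre))
    a_post, b_post = _ols_line(*zip(*post))
    # Compare the post-period line with the projected pre-period line at t0.
    level_change = (a_post + b_post * t0) - (a_pre + b_pre * t0)
    return level_change, b_post - b_pre
```

With hypothetical facility delivery rates (in percent, two facilities per group), `difference_in_differences([40, 42], [55, 57], [38, 40], [44, 46])` returns 9.0: the intervention group improved by 15 points and the comparison group by 6. For a monthly series that rises by 1 per month before a reform at month 5, jumps by 3, and then rises by 3 per month, `interrupted_time_series(list(range(10)), [10, 11, 12, 13, 14, 18, 21, 24, 27, 30], 5)` returns `(3.0, 2.0)`. With no other covariates, fitting the two segments separately gives the same point estimates as the usual single segmented regression with level-change and slope-change terms.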

The table below summarizes key differences between the evaluation methods and highlights the characteristics that make each of them appropriate in different scenarios.

Table: Three Analytic Approaches

Case studies

We also developed case studies in the Philippines, Senegal, and Rwanda to review three common UHC-focused reforms: targeted elimination of user fees, voluntary and contributory community-based health insurance (CBHI), and PBF.

Through these case studies, we found little evidence that any one reform performed consistently well. Even PBF in Rwanda, which had strong country-specific evidence of improving quality of care, failed to improve other markers of UHC, such as equitable access to care. Furthermore, it remains largely unknown whether eliminating user fees or implementing CBHI violates any of Norheim’s unacceptable tradeoffs. In the Philippines, for example, if eliminating user fees for vulnerable populations threatens hospitals’ financial viability, the reform could make the health system less equitable by driving patients to private-sector clinics, which are more likely to charge high user fees.

These case studies highlight that policy evaluation requires an approach tailored to data availability, the outcomes of interest, and how the policy is implemented. In our paper, we proposed difference-in-differences and time series evaluation approaches for our three case study questions, although these are not the only models available to researchers. For example, cluster randomized trials can produce a high standard of evidence, but their high resource costs and the need for researcher control over how the intervention is implemented kept us from recommending them for any of our case studies.

Next steps

Based on this analysis, where do we see this field going?

First, by some estimates up to 85% of health research is wasted, partly because it asks questions to which policymakers neither need nor want answers. The users of policy research, such as ministries of health, insurance providers, and legislators, should drive the development of policy-relevant research questions.

Second, most governments in LMICs lack the resources or systems to fund high-quality health policy research. International donors and research institutions should therefore incorporate population-level health policy evaluations into existing and future programming so that studies answer country-specific questions and address contextual issues. For countries that do fund research, such as the Philippines, strengthening local research institutions’ capacity to collect and analyze data could improve the uptake and use of policy-relevant research.

Third, even with new data sources and tools for evaluating health policy in LMICs, translating research into practice requires considering who should receive the information, the power dynamics between actors, who should deliver the results, and which mechanisms can transfer the information. Achieving UHC will require strong knowledge translation efforts that draw on the skills, talents, and expertise of local actors.